AI Ethics: Accountability – Can We Achieve It?

Accountability is an AI ethics term used by Microsoft, Google, Meta, and Palantir [it is not a term used by Nvidia, Amazon, Boston Dynamics, or IBM, and I can’t find AI ethics statements for X, Tesla, Roblox, …].

Among governments, the EU and the USA do not use the word, while China, South Korea, India, Japan, and Singapore do [Global South vs North?]. The EU AI Act focuses on TRANSPARENCY, which is closely aligned with accountability, but unfortunately it appears to be treated as important only for high-risk systems (EU AI Act Art 13, 52).

It is not enough to say Accountability. We need HUMAN ACCOUNTABILITY. What does human accountability mean? It means knowing which HUMAN is accountable for the AI being deployed, especially if it fails to work as intended or has a negative impact on people and society that was not envisioned or is not legally or morally acceptable under rules and conventions (local, national, and global). It is not just the organization that is accountable but the humans in the organization, preferably including the senior management and board members who are responsible for oversight.

So, while many ethics documents cite accountability, it is not easy to achieve. Let me illustrate this with examples.

One of the challenges we face today is third-party providers. What is a third-party provider? It is any part of a website that is not directly within the site owner's control but is present with their approval.

Your data is often shared with other AI companies thanks to quick-fix productivity hacks (and generative AI is making this worse). If the site owner is accountable, then some person must be responsible for making the site safer. WHO IS THIS IN YOUR ORGANIZATION?

Look at these university sites – some may be familiar (I am using the free tool by Simon Hearne).
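For a rough do-it-yourself complement to such a tool, you can list the external hosts referenced in a page's static HTML. The sketch below is a minimal illustration, assuming you already have the page's HTML as a string; the function names and the example.edu domain are hypothetical. It only sees resources written directly into the HTML (not those added later by scripts), and its first-party rule (the site's domain or any of its subdomains) is deliberately naive, so expect it to undercount compared with a dedicated tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Tags and the attribute each one uses to pull in an external resource.
RESOURCE_ATTRS = {
    "script": "src",
    "img": "src",
    "iframe": "src",
    "link": "href",
}


class ResourceCollector(HTMLParser):
    """Collects the URLs of resources referenced in static HTML."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        wanted = RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative and protocol-relative URLs against the page address.
                self.urls.append(urljoin(self.base_url, value))


def is_first_party(host: str, site_domain: str) -> bool:
    # Naive assumption: the site's own domain or any subdomain counts as first party.
    return host == site_domain or host.endswith("." + site_domain)


def third_party_hosts(html: str, page_url: str, site_domain: str) -> set[str]:
    collector = ResourceCollector(page_url)
    collector.feed(html)
    hosts = set()
    for url in collector.urls:
        host = urlparse(url).hostname  # None for data: URLs, which are skipped
        if host and not is_first_party(host, site_domain):
            hosts.add(host)
    return hosts


# Usage with a small hypothetical page.
page = """
<html><head>
<script src="https://connect.facebook.net/en_US/fbevents.js"></script>
<link rel="stylesheet" href="/css/main.css">
</head><body>
<img src="https://static.example.edu/logo.png">
<iframe src="https://www.youtube.com/embed/abc"></iframe>
</body></html>
"""
print(sorted(third_party_hosts(page, "https://www.example.edu/", "example.edu")))
# ['connect.facebook.net', 'www.youtube.com']
```

Each host in the result is one more party the site owner has approved, and so one more relationship someone in the organization should be accountable for.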

One of the popular third-party providers is Facebook (others are Google, LinkedIn, etc.). If I look at whom Facebook shares its data with … well, you get the picture.

The AI value chain needs transparency: users need to know why data is being shared, what data is being shared, and how it is being used, stored, and disposed of.

Suppose the university or school uses platforms like Blackboard. That opens another Pandora’s box. Hence, a website can easily be linked to 100 third-party providers. Again, who is responsible? Article 28 of the EU AI Act addresses this to some degree (but its focus is high-risk AI systems).

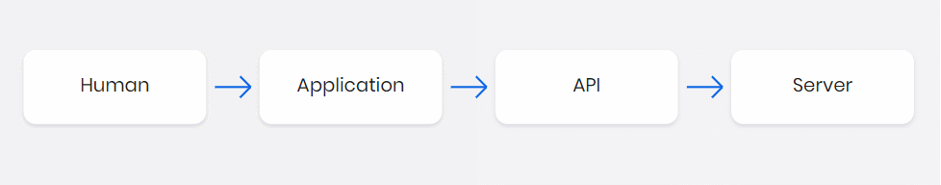

Another way to look at this is to ask: how many API calls are there? APIs (application programming interfaces) are the methods by which different types of software communicate with each other (connecting different software is one of the challenges that came with the evolution of software languages). See the image from tray.io.

APIs account for 83% of all internet traffic, the majority coming from browsers loading pages or from mobile phone applications (which are ‘talking’ to the cloud). 60% of coders use AI to create APIs, thanks to OpenAI/Microsoft and the 2 million API repositories on GitHub (think accountability). Most API security incidents (63%) involve data breaches. If you use a smartphone and browse apps for even two hours, you are making 10–100 calls per minute, or 20,000 API calls per day, to the cloud (this figure is from 2014, so it may well have doubled since). WHO IS RESPONSIBLE?
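The call-volume figures can be put in perspective with simple arithmetic: total calls = calls per minute × 60 × hours of use. The sketch below uses only the 10–100 calls/minute range quoted above; the function names are illustrative. Two hours at that range works out to roughly 1,200 to 12,000 calls, so a 20,000-calls-per-day figure implies longer daily use, about 3.3 hours at the upper rate.

```python
def api_calls(calls_per_minute: float, hours: float) -> float:
    """Estimate total API calls for a given call rate and usage time."""
    return calls_per_minute * 60 * hours


def hours_needed(total_calls: float, calls_per_minute: float) -> float:
    """Hours of use needed to reach a given number of calls at a given rate."""
    return total_calls / (calls_per_minute * 60)


# The 10-100 calls/minute range over two hours of app use.
for rate in (10, 100):
    print(f"{rate} calls/min for 2 h -> {api_calls(rate, 2):,.0f} calls")
# 10 calls/min for 2 h -> 1,200 calls
# 100 calls/min for 2 h -> 12,000 calls

print(f"{hours_needed(20_000, 100):.1f} h at 100 calls/min to reach 20,000")
# 3.3 h at 100 calls/min to reach 20,000
```

Whichever end of the range applies, each of those calls is a data exchange that someone should be able to answer for.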

Accountability requires transparency within an organization about roles and responsibilities, and also transparency toward the outside, so that someone outside the organization knows whom to escalate a concern to (no, not to the legal department; that is just obfuscation)!

Want to know more about AI decision making? Grab our latest book – AI Enabled Business: A Smart Decision Kit.

*Opening Image by Gerd Altmann from Pixabay
